This year at the IEEE VIS Conference (Oct 19-25), members of the Ontario Tech University visualization research group Vialab will be at the Vancouver Convention…

From Saturday, May 4th, to Thursday, May 9th, 2019, Dr. Christopher Collins and Dr. Rafael Veras from Vialab will be attending the ACM CHI Conference…

On Wednesday, June 6th, 2018, a Vialab member will be presenting a new paper. “ThreadReconstructor: Modeling Reply-Chains to Untangle Conversational Text through Visual Analytics” is…

Title: Data-driven Storytelling: Transforming Data into Visually Shared Stories
Abstract: In this talk, I will present my most recent research efforts in the field of information…

Vialab members had several contributions to the IEEE VIS conference in Phoenix this month. Our contributions also represented the extent of the lab’s collaborations, from…

We are pleased to announce that a Vialab member presented a new paper this month. “NEREx: Named-Entity Relationship Exploration in Multi-Party Conversations” was led by…

Funded PhD Position in Interfaces for Explainable Artificial Intelligence
NOTE: This position is not currently available. When an artificial intelligence system makes a decision or…

In the summer of 2017, Dr. Christopher Collins at the Visualization for Information Analysis lab (vialab) at UOIT is seeking to hire 1 or more…

This year at the IEEE VIS Conference in Baltimore, members of the lab will present papers, posters, and workshop contributions! These contributions also represent collaborations with the University…

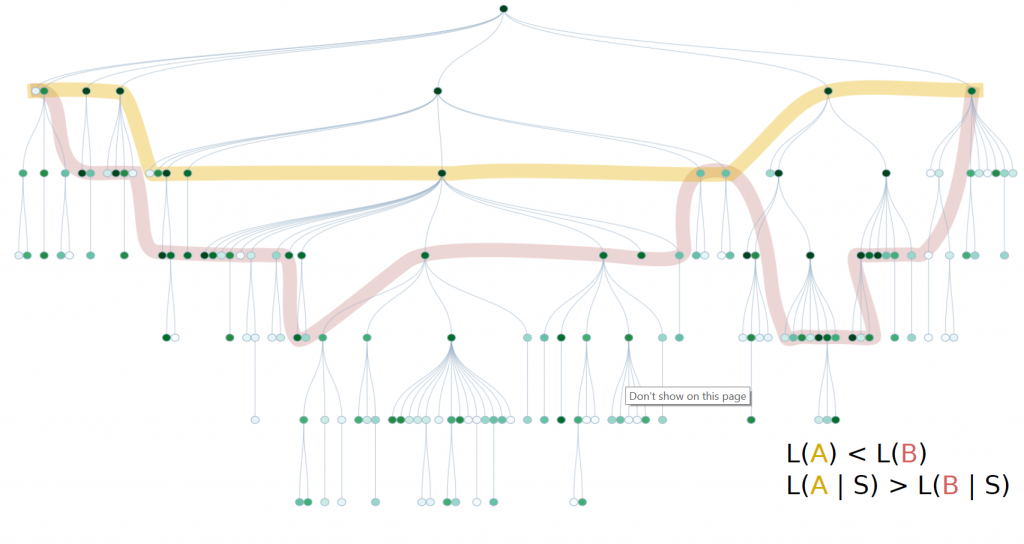

We are following the US presidential debates closely using the interactive visualizations created by Mennatallah El-Assady through our collaboration with the data analysis and visualization group in…